AWS S3

Destination Connector

Introduction

The following information is a configuration reference for your Amazon S3 (Simple Storage Service) destination connector.

The AWS Identity and Access Management (IAM) web service secures access control to AWS resources. IAM manages granular permissions for authentication and authorization to control which users may sign in and what resources they can access.

AWS uses Amazon Resource Names (ARNs) to identify unique resources and services. Applications must use ARNs in policies to access multiple resources within AWS. ARNs have general and resource-specific formats. More information is available at https://docs.aws.amazon.com/IAM/latest/UserGuide/reference-arns.html.

Overview

An S3 bucket may be the destination of data consumed and transformed by Tarsal. After Tarsal ingests and normalizes data from source connectors, the destination connector writes file data to the designated bucket.

See Considerations for details on destination connector behavior and output.

Setting up an S3 destination connector requires these tasks in the AWS Management Console and the Tarsal portal:

- If setting up a new bucket:

- If using IAM Roles for authentication:

- If using access key credentials for authentication:

- Configuring an S3 destination connector in Tarsal

- Testing the connector

Prerequisites

The connector requires specific permissions for S3 to connect.

Before You BeginConfirm you have AWS console administrative privileges for IAM and S3 before configuration.

Authentication

The S3 destination connector supports these authentication methods:

| Authentication Method | Description | Documentation |

|---|---|---|

| Access Key ID and Access Secret | The access key and secret ID for AWS authentication | Managing access keys for IAM users |

| IAM Role | The permissions role associated with an S3 bucket. | IAM roles |

Permissions

For either authentication method above, the connector requires the actions and access levels below:

| Amazon Service | Action | Access Level | Resource(s) |

|---|---|---|---|

| S3 | PutObject | Write | All objects or specified object(s) |

| S3 | ListBucket | List | All buckets or specified buckets(s) |

| S3 | GetBucketLocation | Read | All buckets or specified buckets(s) |

Considerations

Access Keys vs. IAM Roles

S3 connectors authenticate with either access keys or IAM roles in the Tarsal administrative portal.

Access keys have two parts: an access key ID and a secret access key. Think of the access key ID as the username and the secret access key as the password. The connector creates a token with them for AWS authentication.

Access keys should be saved securely. The secret access key is only retrievable upon creation. If the secret access key is lost, a new one must be generated.

AWS supports several types of IAM roles, and service-linked roles for connector authentication are recommended. A service-linked role is tied to a service (i.e., EC2, S3, RDS) with a specific purpose: to assume a role for performing actions. The service owns service-linked roles.

Access keys require more maintenance; they are easily distributable and should be regularly rotated for security purposes. Removing secret access keys immediately invalidates them, preventing applications from functioning until updated with the new credentials. They typically require code updates and deployments that may increase timelines more than other authentication methods and live longer than IAM roles.

IAM roles provide more security than access keys and have a limited scope of permissions. They’re temporary, centrally managed in the AWS console, and not distributable.

Choosing a Connector Authentication MethodIAM roles are the preferred authentication method for the majority of Tarsal customers. AWS and Tarsal encourage roles instead of keys.

AWS Infrastructure

Using Existing AWS Infrastructure

All configuration instructions assume creating new AWS resources across all services for the S3 destination connector. Reuse of IAM and S3 objects is possible, though not recommended.

If you choose to use existing infrastructure, replace the suggested resource names in this guide with your existing ones where applicable.

Selecting AWS RegionsThe connector's resources and services should reside in the same AWS region.

IAM and Resource Security

The policies presented here provide the connector access to all resources (*) under the necessary services to simplify the configuration process and limit policy maintenance.

AWS and Tarsal recommend defining access levels as restrictively as possible. Limit the connector to only the necessary S3 buckets and actions.

Before You BeginThroughout the configuration, you’ll be copying values for later use. Save them to an easily accessible location for reference.

Create the S3 Bucket

Naming S3 BucketsYou can’t change bucket names after creation! If you want to change the bucket name, create a new bucket with a new name and copy any necessary data over.

If you want to create a new S3 bucket for the connector data destination:

- Sign in to the AWS Management Console at https://console.aws.amazon.com/s3.

- Click the AWS region drop-down list next to your name in the upper right and select the desired region for the bucket.

- Under General Purpose Buckets, click the Create Bucket button.

- Under General Configuration, enter Bucket Name.

- Under Object Ownership, ensure ACLs Disabled is selected.

- Leave the remaining default selections unchanged and click the Create Bucket button.

Create the IAM Policy

IAM policies are allow policies that control permissions for AWS objects. They define and enforce resource access for users, roles, and services. Policies are checked on every request and are agnostic of the operational method used (the AWS Console, CLI, or API).

The policy grants AWS services to associate with the connector’s IAM user and role.

Create the IAM policy with S3 object permissions:

- Sign in to the AWS Management Console at https://console.aws.amazon.com/iam.

- Go to Access Management > Policies from the left navigation.

- Click the Create Policy button in the upper right.

- Click the JSON tab.

- In the Policy Editor

- Delete the existing JSON.

- Copy and paste the following policy:

{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Action": [ "s3:GetObject", "s3:PutObject", "s3:ListBucket" ], "Resource": [ "*" ] } ] } - Click the Next button.

- Under Policy Details, enter

tarsal-s3-destination-connector-policyfor Policy Name. - Click the Create Policy button.

Create the IAM Role

IAM roles are identities with specific permissions and short-lived credentials. Roles are assigned to IAM identities with permissions and trust policies and for access management.

Create a service-linked assumable role, add a custom trust policy for the connector, and attach the permissions policy:

- Sign in to the AWS Management Console at https://console.aws.amazon.com/iam.

- Go to Access Management > Roles from the left navigation.

- Click the Create Role button in the upper right.

- For Trusted Entity Type, select the

Custom Trust Policyradio button.- Sign in to the Tarsal portal at https://app.tarsal.cloud.

- Go to Account > Settings from the left navigation.

- Click the Cloud Tools tab.

- Next to

Sample Trust Policy with Minimum Privileges, click the clipboard icon ( ) on the right to copy the trust policy. The policy includes Tarsal’s AWS account ID to assume the role.

) on the right to copy the trust policy. The policy includes Tarsal’s AWS account ID to assume the role. - Make any changes necessary

- Click the Next button.

- Under Permissions Policies, click the drop-down list under Filter by Type and select

Customer Managed. - Locate

tarsal-s3-destination-connector-policyand select the checkbox to the left of the name. - Click the Next button.

- Under Role Details, enter

tarsal-s3-destination-connector-rolefor Role Name. - Click the Create Role button.

- In the Roles list, click

tarsal-s3-destination-connector-role. - Under Summary, select the Copy ARN button and save the value for retrieval later.

Choosing a Connector Authentication MethodComplete the steps in the next section only if you are authenticating with access keys. Please refer to Access Keys vs. IAM Roles when making a decision.

Create the IAM User

Create an IAM user and assign permissions. This user assumes the role previously created.

- Sign in to the AWS Management Console at https://console.aws.amazon.com/iam.

- Go to Access Management > Users from the left navigation.

- Click the Create User button in the upper right.

- Under User Details, enter

tarsal-s3-destination-connector-userfor User Name. - Click the Next button.

- Under Permissions Options, click the radio button next to

Attach Policies Directly. - Under Permissions Policies, click the drop-down list under Filter by Type and select

Customer Managed. - Locate the

tarsal-s3-destination-connector-policyand select the checkbox to the left of the name. - Click the Next button.

- Click the Create User button.

Complete the following steps only if you plan to authenticate the connector with access keys.

User SecurityAWS best practices recommend assigning permissions to user groups rather than users. The following steps assume a single Tarsal S3 destination connector user; therefore, the instructions attach permissions to the user rather than a user group. However, you may assign the user to a group and apply the IAM policy there.

Generate access keys for previously created user:

- Sign in to the AWS Management Console at https://console.aws.amazon.com/iam.

- Go to Access Management > Users from the left navigation.

- In the Users list, click

tarsal-s3-destination-connector-user. - Click the Security Credentials tab.

- Locate Access Keys and click the Create Access Key button.

- For Use Case, select the

Third-party Serviceradio button. - Check the checkbox under Confirmation.

- Click the Next button.

- For Description Tag Value, enter

Tarsal S3 Destination Connector. - Click the Create Access Key button.

- Click the Download .csv File button.

- Click the Done button after the

.csvdownload.

The keys are added later when configuring connector authentication.

Storing KeysRecord and store the secret access key in a safe place! Lost secret access keys are not recoverable.

If you no longer have your key, deactivate the old key, generate a new one, and update the connector credentials in the Tarsal portal.

AWS Access Key Best Practices

- Never store your access key in plain text, a code repository, or code.

- Disable or delete the access key when no longer needed.

- Enable least-privilege permissions.

- Rotate access keys regularly.

Visit https://docs.aws.amazon.com/IAM/latest/UserGuide/id_credentials_access-keys.html#securing_access-keys for more information.

Configure the S3 Destination Connector

This reference table describes the portal fields required for key and role authentication types. Replace the values in brackets ({}) with the applicable information for your AWS account and resources.

Parameter | Description | Authentication Method | Format | Example | |

|---|---|---|---|---|---|

| Optional | The S3 URL when the bucket is configured as a static website | IAM role Access key ID and secret |

| |

| Required | The name of the S3 bucket storing the destination file(s) | IAM role Access key ID and secret |

|

|

| Optional | The folder within the bucket storing the destination file(s) | IAM role Access key ID and secret |

|

|

| Required | The AWS location of the bucket | IAM role Access key ID and secret |

|

|

| Required if authenticating with IAM Role | The AWS resource name of the role assumed by the connector | IAM role |

|

|

| Required if authenticating with Access Keys | The AWS ID for the S3 key that provides permissions | Access key ID and secret | 16-128 characters |

|

| Required if authenticating with Access Keys | The S3 secret for the AWS S3 key ID | Access key ID and secret | 16-128 characters |

|

| Required | The delimiter in the destination data file(s) | IAM role Access key ID and secret |

|

Add and configure the S3 destination connector based on the chosen authentication method:

- Sign in to the Tarsal portal at https://app.tarsal.cloud.

- Go to Configuration > Destinations from the left navigation.

- Click the Add Destination button in the upper right.

- Click AWS S3.

- Under Metadata, enter

AWS S3for Name. - Under Configuration, enter

tarsal-s3-destinationor your bucket name for S3 Bucket Name. - Click the S3 Bucket Region drop-down list and select the bucket’s AWS region.

- Click the Authentication drop-down list and select your chosen method.

- For

IAM Role Authentication- For Auth Method, paste the IAM Role ARN you previously copied for AWS ARN Role. You can also enter

arn:aws:iam::{AWS_ACCOUNT_ID}:role/tarsal-s3-destination-connector-role. Replace{AWS_ACCOUNT_ID}with your 12-digit AWS account number. Do not enclose the value in brackets.

- For Auth Method, paste the IAM Role ARN you previously copied for AWS ARN Role. You can also enter

- For

Access Key ID and Secret- Open the downloaded file

tarsal-s3-destination-connector-user_accessKeys.csv. - Copy

Access Key IDand paste the value for S3 Key ID. - Copy

Secret Access Keyand paste the value for S3 Access Key.

- Open the downloaded file

- For

- Under Output Format

- Select

JSONL: Newline-delimited JSON.

- Select

- Click the Save button.

The portal immediately notifies you whether the connector configuration is successful with a status banner in the lower right.

If the connector configuration fails, verify all preceding steps or contact Tarsal customer support. See the next section for testing.

Test the Connector

In the portal, hover over the icon in the Health column for the connector in the list on the Destinations page or next to the Status label on the connector’s detail page. A broken heart icon indicates failure, and the Summary widget on the Destinations page also lists destinations errors.

Updating AWS ConfigurationsBe sure to test the connector configuration in the portal after any AWS changes to associated users, roles, policies, or regions.

- Sign in to the Tarsal portal at https://app.tarsal.cloud.

- Go to Configuration > Destinations from the left navigation.

- In the Destinations list, click

. . .(three dots) under the Actions column for the connector. - Select

Testfrom the drop-down list.

A banner in the lower right indicates the success or failure of the connector configuration test.

Alternatively, the connector can be tested directly from the connector destination detail page using the Test button in the upper right.

Considerations

Output Path

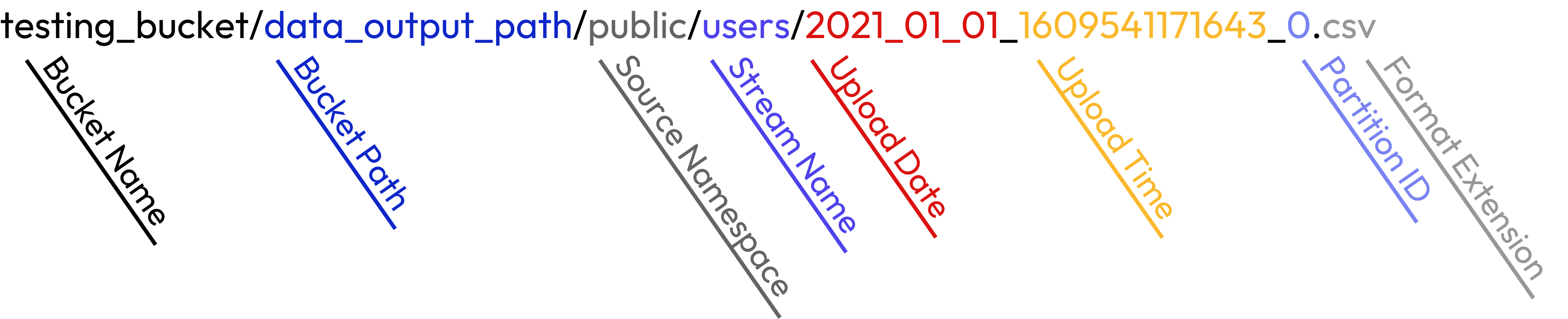

The naming patterns for output paths follow these conventions:

- The upload timestamp is a concatenation of the upload date (

YYYY-MM-DD), upload time (ms), andpartition ID - The path sections and upload date use underscores (

_) as separators - The

partition IDis a UUID

The structure of the full output path for data files is:

>

For example:

Stream PrefixesStream names have prefixes if defined in the connector configuration.

Output Schema

Each stream renders a complete datastore of all output files to the path set in the connector configuration, with one stream per directory.

| Column | Condition | Description |

|---|---|---|

data | Always exists | The log or event data |

_tarsal_metadata | Always exists | Tarsal-defined column for each record; an event processing timestamp and UUID |

| Root-level fields | Root-level normalization (flattening) | Expanded root-level field |

JSON Lines (JSONL)

JSON Lines, or newline-delimited JSON, is a text format for structured data. JSONL has one JSON per line and handles tabular and nested data better than CSV. The output file has the following structure:

{

"_tarsal_metadata": "<json-metadata>"

"data": "<json-data-from-source>"

}An example of JSON source and output follows:

[

{

"user_id": 123,

"name": {

"first": "John",

"last": "Doe"

}

},

{

"user_id": 456,

"name": {

"first": "Jane",

"last": "Roe"

}

}

]{ "_tarsal_metadata": {"_tarsal_ab_id": "0a61de1b-9cdd-4455-a739-93572c9a5f20", "_tarsal_emitted_at": "1631948170000"}, "data": { "user_id": 123, "name": { "first": "John", "last": "Doe" } } }

{ "_tarsal_metadata": {"_tarsal_ab_id": "0a61de1b-9cdd-4455-a739-93572c9a5f20", "_tarsal_emitted_at": "1631948170000"}, "data": { "user_id": 456, "name": { "first": "Jane", "last": "Roe" } } }Updated about 1 year ago